Google might not be as responsible for the spread of fake news as social media, but the search giant is still doing something about it: burying known sources of fake news, and letting users weigh in, too.

Google frequently tweaks the algorithms that return relevant search results, sometimes privately and other times publicly. In this case, Google announced Tuesday that about 0.25 percent of its daily search queries have been returning “offensive or clearly misleading content,” and that those results will be pushed lower in favor of more authoritative pages.

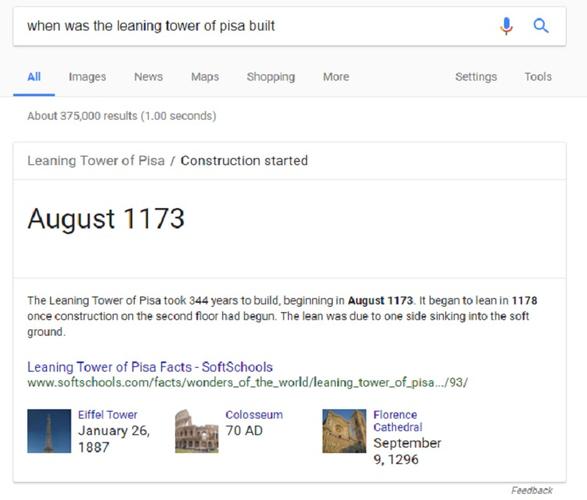

Google is also adding Feedback links that let users report problems with autocomplete suggestions and with Featured Snippet text.

Deliberately misleading information, as well as outright fabrications, plagued the 2016 U.S. presidential primary season and election. Numerous sources found that sites purporting to be legitimate news services were simply making up “news” stories for profit, or as propaganda, and sharing them on social media sites like Facebook. Politicians on both sides used “fake news” as a rallying cry, and research such as a Stanford University study found that students were increasingly unable to tell the difference between a legitimate news story and a fabricated one.

The problem, according to Google, is that 15 percent of all daily searches are brand new. Google can easily answer a query like “Is the earth flat?” But when it encounters a query it hasn’t seen before, it has fewer sources of context to rely on.

What Google’s feedback will look like for autosuggested searches. (Image: Google)

Why this matters: Google’s efforts should be applauded, but it’s unclear what difference this will make in the long run. The problem isn’t so much that users are searching for fake news; it’s that they’re being force-fed a steady diet of propaganda from friends, contacts, and fake-news sites they’ve already bought into. Facebook tried to solve the problem last fall by implementing verification mechanisms to combat fake news, but it’s not clear that they’re working.

Letting users help decide what’s fake, offensive

Last fall, Google announced plans to attach a “Fact Check” label to search results, which it began rolling out in April. Fact Check attempts to judge whether the information in question is truly accurate, using sources like Snopes.com. Still, Fact Check was a response to users who were already searching for a given news report or “fact.” Google’s latest tweak, by contrast, makes erroneous results harder to find.

“We’ve adjusted our signals to help surface more authoritative pages and demote low-quality content, so that issues similar to the Holocaust denial results that we saw back in December are less likely to appear,” Ben Gomes, Google’s vice president of engineering for search, wrote.

Feedback for Featured Snippets lets users identify possible fake news. (Image: Google)

That effort will be assisted by what Google calls Search Quality Raters, real people who assess the quality of Google’s search results. While those people don’t affect search results in real time, they do provide feedback on whether the changes to the algorithms are working, Gomes wrote.

More direct input from users themselves will come via new Feedback links attached to autocompleted searches and Featured Snippets. These links are tiny, a gray bit of text at the bottom of the autocomplete bar or the snippet itself. Clicking them, however, allows users to provide direct feedback. In the example Google provided, users can mark checkboxes to state whether a suggested search was hateful or sexually explicit. (Google doesn’t allow autocompleted queries to be marked as inaccurate, since they’re presumably questions.)

Google’s Featured Snippets evolved as quick synopses of the answers users were searching for, such as when the Leaning Tower of Pisa was built. Now users can assess a snippet with anything from “I don’t like this” to “This is harmful, dangerous, or violent” to “This is misleading or inaccurate.”

It’s not clear what happens when an autocompleted search or snippet is marked as inaccurate or offensive, however. (We’ve reached out to Google, but representatives didn’t immediately respond.) Will Google’s algorithms take over, or will an actual human make the call? Reporting real information as fake could have negative effects, too. As we’ve learned over the past few months, “fake news” is often in the eye of the beholder.
